How I Studied LLMs in Two Weeks: A Comprehensive Roadmap

A day-by-day detailed LLM roadmap from beginner to advanced, plus some study tips

Understanding how LLMs operate under the hood is becoming an essential skill in machine learning. Whether you’re choosing the right model for your application, looking for a general knowledge of the field, or following discussions about LLMs and their potential to understand, create, or lead to AGI, the first step is understanding what they are.

In this article, I am going to share my learning experience, and the resources I found most helpful in learning about the fundamentals of LLMs in about 14 days, and how you can do this in a relatively short time. This roadmap could help you learn almost all the essentials:

· Why I Started This Journey

· My Learning Material

∘ 1. Build an LLM from Scratch

∘ 2. LLM Hallucination

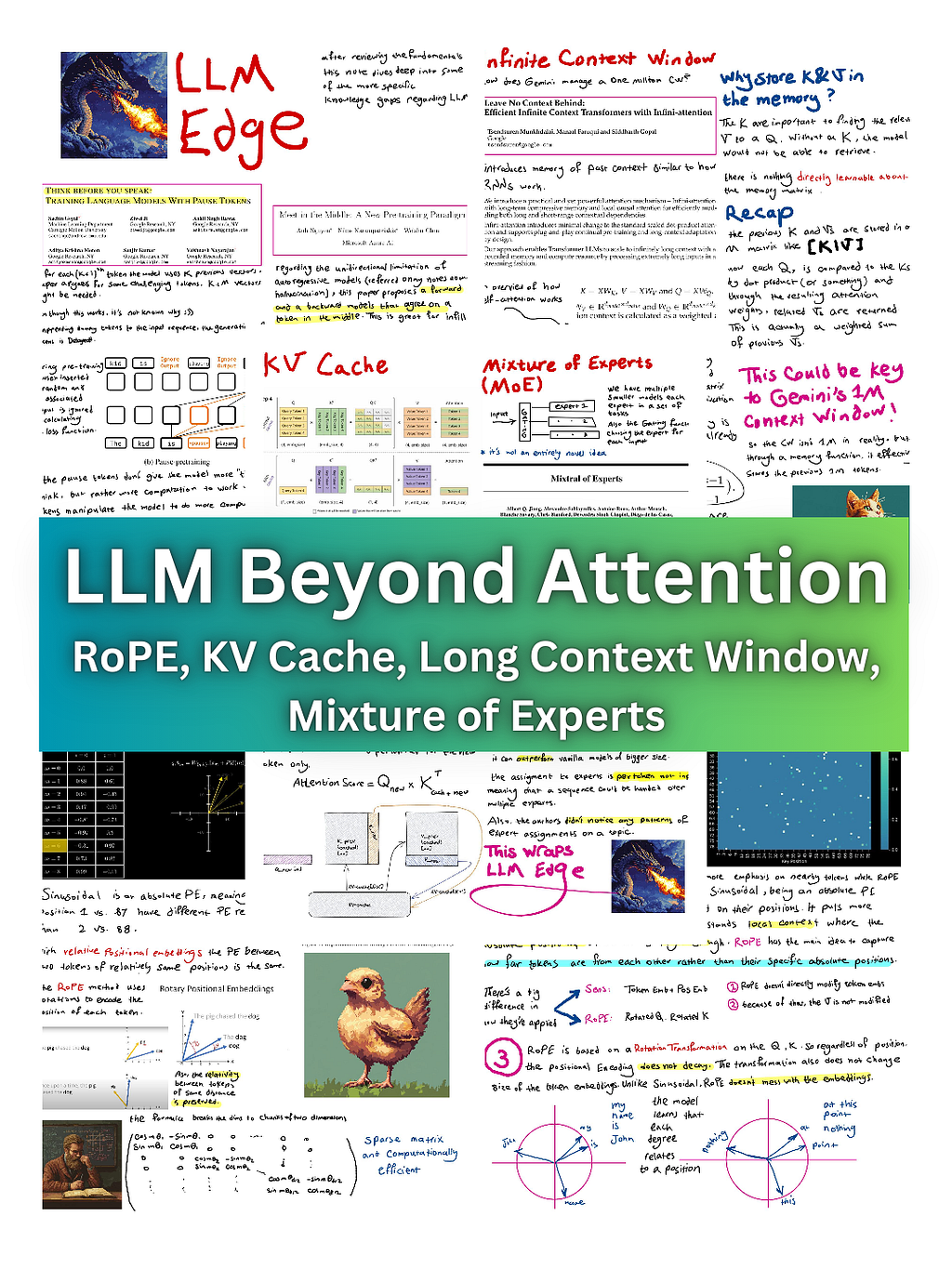

∘ 3. LLM Edge: beyond attention

· My Learning Resources

· Prerequisites To Begin

∘ Mathematics

∘ Programming and Frameworks

∘ Deep Learning Concepts

· Some Bonus Tips ✨

∘ Enjoy the Process

∘ Don’t Follow My Roadmap

∘ Don’t Finish Everything

· Wrap Up

Why I Started This Journey

I am obsessed with going deeper into concepts, even if I already know them. I could already read and understand the research on LLMs, I could build agents or fine-tune models. But it didn’t seem enough to me.

I wanted to know how large language models work mathematically and intuitively, and why they behave the way they do.

I was already familiar with this field, so I knew my knowledge gaps exactly. The fact that I have a background in machine learning and this field specifically has helped me big time in doing this in two weeks, otherwise this would take more than a month.

My Learning Material

I wanted to do this learning journey not just for LLMs, but many other topics in my interest (Quantum Machine Learning, Jax, etc.) So to document all this and keep it tidy, I started my ml-retreat GitHub repository. The idea was that sometimes we need to sit back from our typical work and reflect on the things we think we know and fill in the gaps.

The repository was received much more positively than I expected. At the time of writing this article, it has been starred ⭐ 330 times and increasing. There were many people out there looking for something I noticed, a single comprehensive roadmap of all the best resources out there.

All of the materials I used so far are free, you don’t need to pay anything.

I studied LLMs majorly in three steps:

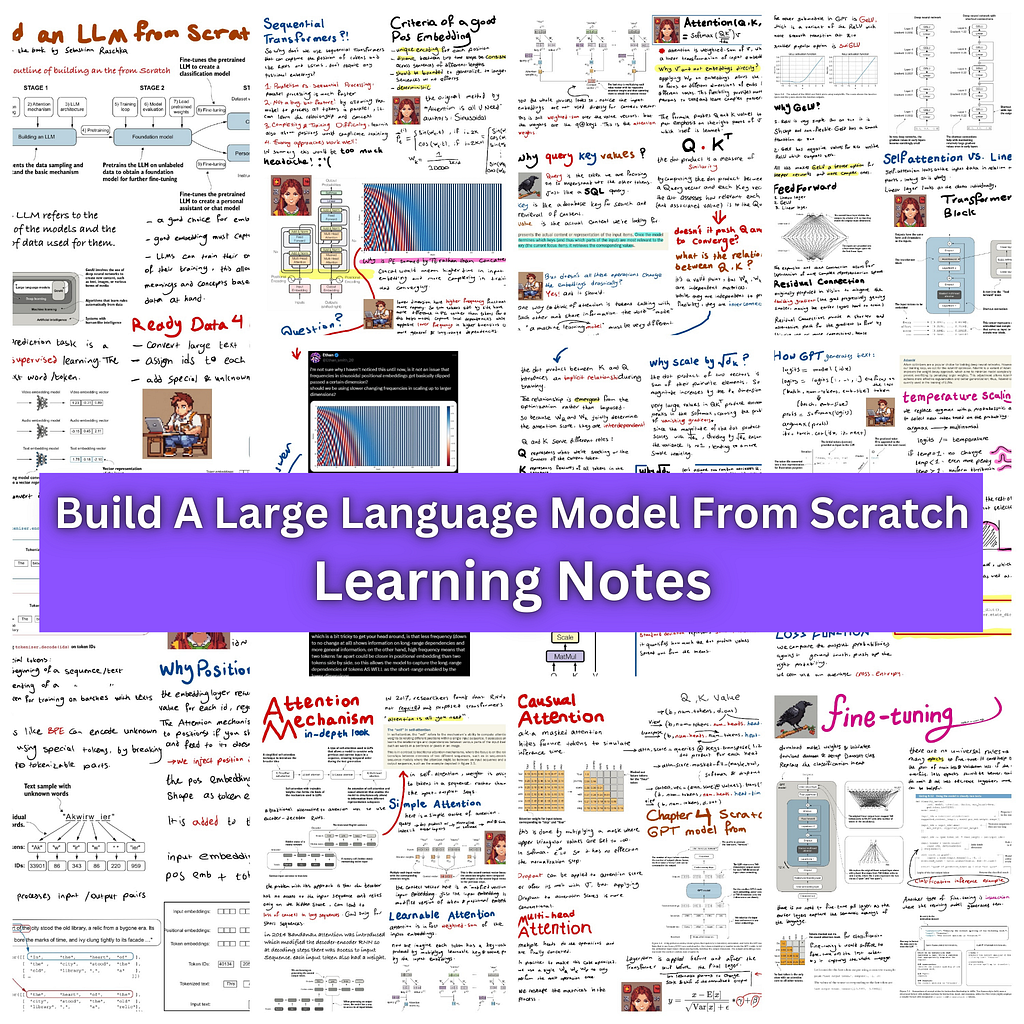

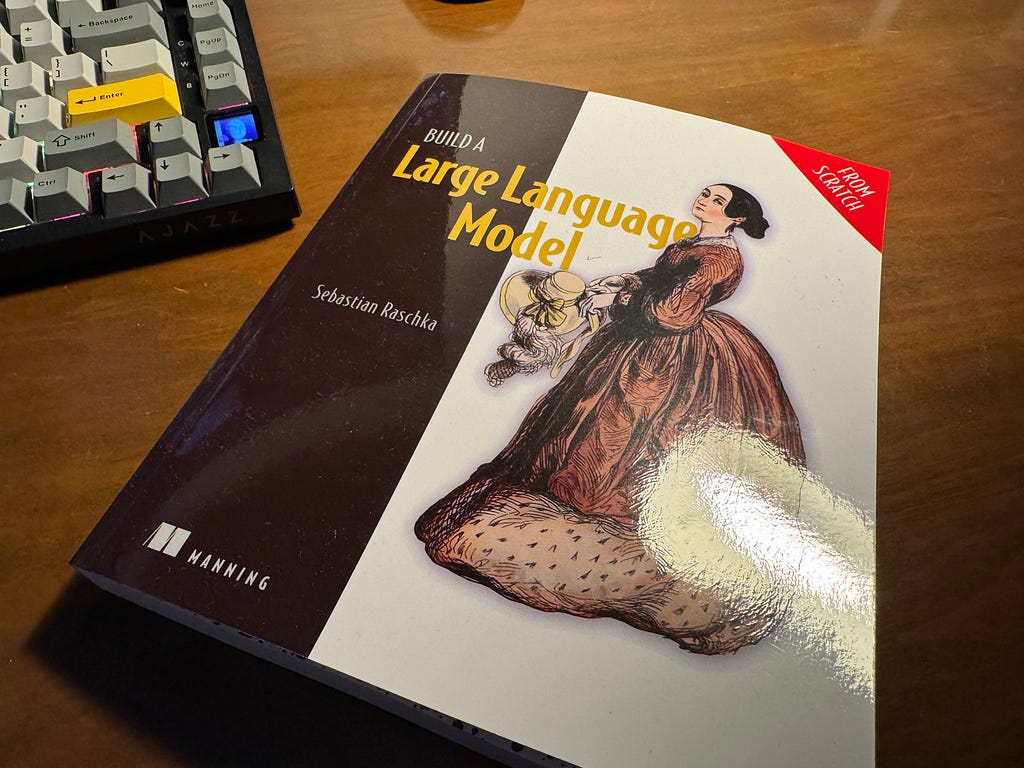

1. Build an LLM from Scratch

This would conclude the fundamentals of language models. Token and positional embeddings, self-attention, transformer architectures, the original “Attention is All You Need” paper and the basics of fine-tuning. While I have used numerous resources for each topic, a crucial resource for this was Build a Large Language Model (From Scratch) by Sebastian Raschka (you can read it for free online). The book beautifully uncovers each of these topics to make them as accessible as possible.

The Challenge of this stage in my opinion was self-attention — not what it is, but how it works. How does self-attention map the context of each token in relation to other tokens? What do Query, Key, and Value represent, and why are they crucial? I suggest taking as much time as needed for this part, as it is essentially the core of how LLMs function.

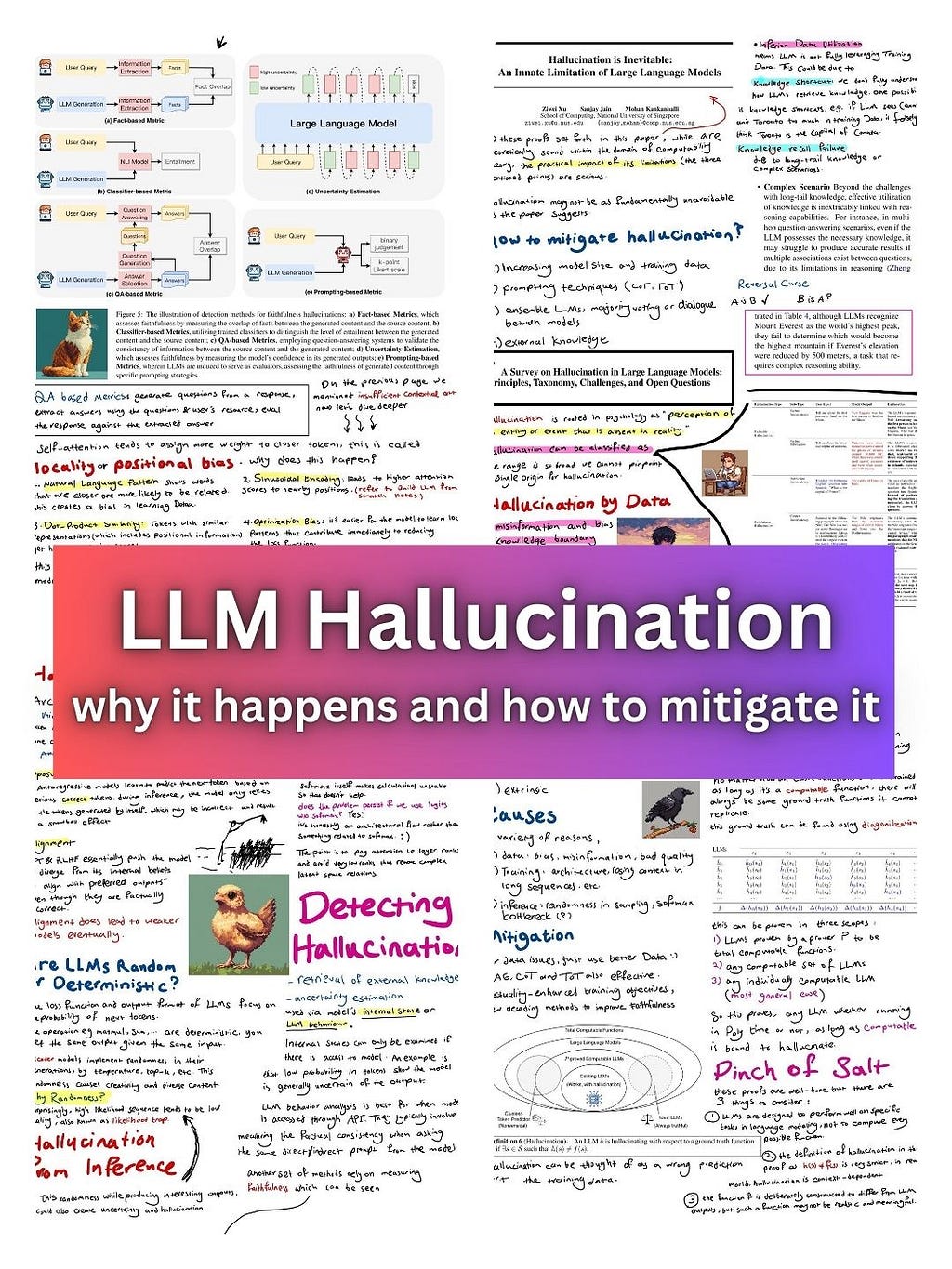

2. LLM Hallucination

For the second part of my studies, I wanted to understand what hallucination is and why LLMs hallucinate. This was more of a personal question lurking in my mind, but it also enabled me to understand some aspects of the language models.

I learned about positional bias where LLMs favor the closer tokens and forget about the tokens further away. I learned about exposure bias which implies in the inference phase, predicting a wrong token could derail the generation process for the next tokens like a snowball effect. I also learned how Data, Training, and Inference each contribute to this hallucination dilemma.

Hallucination is a big pain in the head for both researchers and those who build applications with LLM. I strongly suggest you take the time to study why this happens and also methods to mitigate it.

3. LLM Edge: beyond attention

The last two stages show you how LLMs work. There are some techniques however that are not so basic but have become mainstream in building LLMs. So I studied:

- Pause tokens that give LLMs more time to “think”.

- Infini-attention which allows LLMs to have very big context windows (like Gemini’s 1M context window) by leveraging a sort of memory of previous tokens.

- RoPE (Rotary Positional Embedding), a relative positional embedding method used in Llama and many other LLMs that gives the benefit of attending to tokens far away in the sequence.

- KV Cache to speed up generation by eliminating calculations repeated in generating previous tokens.

- Mixture of Experts (MoE), which incorporates several smaller LLMs instead of a big one. This technique was popularized in language models by Mistral. Their 8 models of size 7B could outperform Llama 2 70B on some tasks, so it really is impressive!

To recap these subjects, I studied the architecture and code of Meta’s Llama which encapsulates many of the subjects I mentioned. The resource for this is again, on my repository.

My Learning Resources

I didn’t use a single resource to learn these subjects.

For the basics of LLMs, I used Build a Large Language Model (From Scratch).

I also read many papers. Reading papers could seem difficult, but they add so much value. Especially those that first proposed a technique (like the original transformer paper) and also the survey papers that digest many papers and give you the TL;DR.

YouTube videos are especially very helpful. I watched YT as my first step in studying many of these materials, just to warm myself up and have a perspective. I highly suggest watching Andrej Karpathy’s playlist which contains mostly videos about language modeling and LLMs. What is better than having a genius explain to you LLMs from zero to hero!

Prerequisites To Begin

Learning about LLMs is not complex, but it’s not exactly beginner-friendly either. A foundational understanding of machine learning and related subjects will make the learning process smoother.

Mathematics

- Linear Algebra: Vectors and matrices, matrix multiplication

- Probability and Statistics: Basics of probability, random variables and distributions, expectation and variance, maximum likelihood estimation (MLE)

- Calculus: Differentiation and integration (especially for backpropagation), partial derivatives (for gradient-based optimization)

- Optimization: Gradient descent, stochastic gradient descent (SGD), advanced optimizers (e.g. Adam)

Programming and Frameworks

- Python: Familiarity with libraries such as NumPy and Pandas

- Deep Learning Frameworks: TensorFlow or PyTorch, familiarity with model training, debugging, and evaluation

Deep Learning Concepts

- Understanding of perceptrons, activation functions, and layers. Backpropagation and gradient descent. Loss functions (Cross-Entropy, MSE)

- Convolutional Neural Networks (CNNs) (Optional but helpful): Useful for understanding how layers in models operate

Naturally, you may not know some of these. But it doesn’t mean you shouldn’t start the learning. Just know that if you struggle at certain times, it’s expected, and you come back to learn them in more depth later on.

Some Bonus Tips ✨

Some things I learned along the way or could help you in your study:

Enjoy the Process

I did mention I learned these subjects in two weeks. They’re not super complex, but I only mentioned time to emphasize this is not too difficult to do. I suggest that you don’t care about learning these materials by a strict deadline. Of course, when I started this I had no intention of doing this in 14 days. I just did. But it could last 1 month easily and I would have no problem, as long as I could have the pleasure of finding things out.

Don’t Follow My Roadmap

It might sound strange, but my learning path is my learning path. Don’t feel like you need to follow my exact roadmap. It did fantastic for me, but there’s no guarantee it would be likewise for you.

Learning is a very personal experience. What you learn is a product of what you know, and what you want to know. This is different for anybody. So please don’t follow my roadmap, but simply pick the good parts you are interested in. And this is the same case for any other roadmaps you see and hear out there. No single book, resource, or roadmap is the best, so don’t limit yourself to one single thing.

Don’t Finish Everything

When you pick up a book, YouTube video, or paper to study any of these materials, you aren’t sworn by blood to finish it. You’re just there to pick up what you came for and leave. Papers especially can be so time-consuming to read. So here’s my advice:

Before reading any of these materials, identify the question that you have in your mind, and look specifically for the answer. This saves you from wasting your time on unrelated content that may be great, but not relevant.

Wrap Up

I am blown away by the community’s support both for my repository and me sharing my learning path. I will continue to study more subjects, Omni models, ViT, GNN, Quantum Machine Learning, and many more are on my list. So don’t miss out on my X posts where I share the digest of my notes.

Also, my GitHub repository ml-retreat is where I shared all of the materials I have shared so far:

GitHub - hesamsheikh/ml-retreat: intermediate to advanced AI learning path

Thank you for reading through this article. If you’re interested in a further read, here are my suggestions :)

How I Studied LLMs in Two Weeks: A Comprehensive Roadmap was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Datascience in Towards Data Science on Medium https://ift.tt/FuqZWQB

via IFTTT